More data was created in 2011 than in all the preceding years put together. Everyone has been talking about data these last few years, and the term Big Data, which made such waves in 2012, has turned out to be a major challenge a few years later. Connected devices, social networks, Open Data: the sources of data are multiplying, dangling in front of insurers the prospect of detailed knowledge of the expectations, behavior and risk appetite of policyholders and prospects. All this holds great promise: new products and innovative services, increasing individualization and access to predictive models. Yet manipulating this data is not straightforward, for legal, technical and economic reasons, and the value of the different data sources therefore varies. So, in order to conduct data science effectively, do you need to take account of all the data sources or work with a selection?

An informational asset to be exploited

Available databases are increasingly plentiful and full. Yet the insurance sector still suffers from a lack of usable information. Under the traditional model, an insurer is unable to determine why two people of the same socio-professional grouping act differently, and actuarial pricing models often take into account only four or five indicators. To extract information that adds value, it is necessary to identify groups of weak indicators by cross-referencing multiple data sources, in order to find correlations and adapt services accordingly.

Before starting, a clear vision of the project plan and its business objectives is needed. What exactly is the challenge of the Big Data transformation? Where should you start? What is the right path to follow to generate added value? And, above all, how do you follow the process through to the end without abandoning the project along the way?

Insurance: a sector experiencing major change…

The insurance sector is undergoing unprecedented change. Regulatory pressures, changes in consumer behavior and technological advances, as well as socioeconomic changes, are pushing insurance to seek new growth opportunities, and new questions are being raised on its value proposition and its social and economic role. Beyond digital, the value of data is core to the solution and represents a lever for this inevitable transformation.

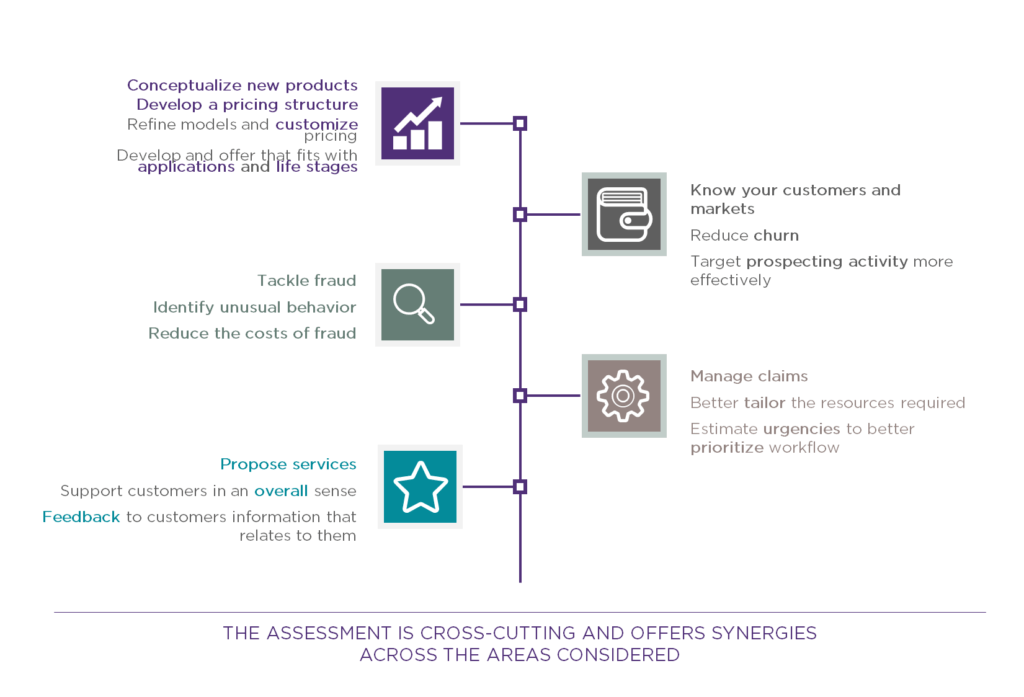

Looking at the opportunities from data in the round, Big Data offers a chance to examine the entire insurance value chain, from the design of new products and customer knowledge to claim management and proposals for new services. In this context, repositioning requires an understanding of customer needs in the broadest sense, and not just product by product. Below are some examples of how data can benefit the insurance value chain.

Using the same data sources, the right answers to these needs can be found by simplifying the analytical approach to focus on significant life events of the insured, while remaining vigilant about false positives. In concrete terms, one source of data can serve several purposes and, therefore, fulfill different needs. Simplifying the analysis also requires a detailed knowledge of these major life events, in order to be in a position to offer new value propositions at the right time and to anticipate changes in customer behavior. By way of example, data from social networks could first be used to get to know the customer better, and then reused in the context of a counter-fraud initiative: an accident report submitted by two people who are friends on Facebook casts doubt on the credibility of the claim. In addition, collecting data via cookies on the internet or social networks, or predicting customers' house moves, feeds into predicting cancellations of home insurance and, therefore, allows marketing actions to be better targeted to reduce churn.
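As a minimal sketch of the counter-fraud reuse described above, the snippet below flags claims whose parties are connected on a social network. The function name `flag_connected_claims`, the claim identifiers, and the sample data are all hypothetical and chosen only for illustration. A flag here is a prompt for manual review, not evidence of fraud, in keeping with the point about false positives.

```python
from itertools import combinations


def flag_connected_claims(claims, friendships):
    """Return the ids of claims in which at least two parties are connected.

    claims:      dict mapping a claim id to the list of people involved (hypothetical format)
    friendships: iterable of (person, person) pairs taken from a social-network source
    """
    # Store each friendship as an unordered pair so (a, b) matches (b, a).
    friend_pairs = {frozenset(pair) for pair in friendships}
    flagged = []
    for claim_id, parties in claims.items():
        # Check every pair of parties named on the claim.
        if any(frozenset(pair) in friend_pairs for pair in combinations(parties, 2)):
            flagged.append(claim_id)
    return flagged


# Illustrative data only; none of it comes from the article.
claims = {
    "C-001": ["alice", "bob"],
    "C-002": ["carol", "dave"],
}
friendships = [("bob", "alice")]

flagged = flag_connected_claims(claims, friendships)
print(flagged)
```

The same `friendships` input could equally feed a customer-knowledge use case, which is the sense in which one source serves several purposes.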

In this sense, collecting information about customers will be beneficial for insurers provided that they can make use of it properly and filter out false positives by selecting the appropriate data to analyze. The starting point for the selection must be a comprehensive view of the possible data sources and their potential for different parts of the value chain. Consequently, the data should not be organized in silos, but as a whole, in order to serve the various relevant processes. Moreover, the role of the chief data officer is to identify the pertinent data for the various functions, and to help determine how it can best be used.

… as it responds to the threat of new entrants:

More than a challenge, this transformation is currently a necessity for insurers. Otherwise, the imminent arrival of the GAFA (Google, Apple, Facebook, and Amazon) players in the market, or the effects of the uberization of economies, will skew the field in favor of those companies closest to, and having the most interactions with, their customers. In addition to their expertise in data analysis and their capacity to use personal user information as a catalyst to provide high value-added services, the GAFA players have diversification capacity coupled with considerable financial power. Google, for instance, has already invested $32.5 million in the innovative health insurance start-up Oscar Health.

Aside from the GAFA players, other actors seeking to position themselves as innovative may also pose a threat to traditional insurers. The start-up company, The Climate Corporation, purchased by Monsanto, is a good example of this. It offers a new insurance service model to farmers and requires a strict agricultural process to be followed. The Climate Corporation is a technological platform which, through the analysis of agricultural data and weather simulations, helps farmers to improve their operations and anticipate weather risks. Any event that is poorly predicted leads to a compensatory payment for the farmer.

In summary, to safeguard the viability of their businesses, insurers would do well to use Big Data as a means of “disruptive” repositioning, meeting customers’ needs by proposing new products and services.

The “Think big, try small” approach:

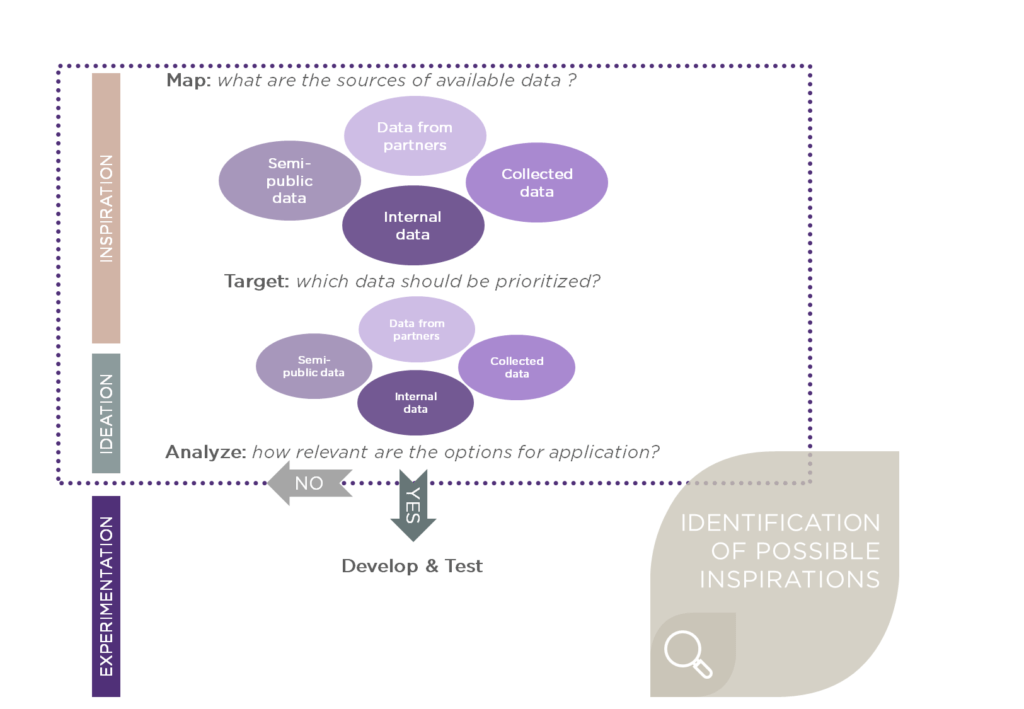

In order to attempt such a transformation and exploit the value of data, it is in insurers’ interests to advance in small steps, entailing little financial outlay and bringing controlled results. Many data sources are available; they might come from internal data, connected devices, social networks, partners or semi-public agencies like SNIIRAM (the French National inter-plan information health insurance system). Currently, these sources are either scarcely utilized, or badly utilized. To exploit their value and bring to market new products that are adapted to the transformation taking place, the concept must follow a structured, iterative process in four key stages.

• Mapping: Identify the available data sources – with no preconceived ideas.

In order to identify all the potential sources of data in the most comprehensive manner possible, judgments must be made against the scenarios for possible use. Conversely, a comprehensive assessment of all data sources lends itself to generating the most disruptive concepts. To this end, Wavestone has set up Creadesk to support its clients through such changes and encourage the development of innovative ideas. Creadesk is effectively a collection of resources (process tools, actual or virtual work locations, and experienced personnel) aimed at promoting the co-creation of innovative ideas and the development of POCs (Proofs of Concept).

• Target: Identify and prioritize internal and external data sources.

Once the data sources have been mapped out, a priority order can be created using two levels of analysis. The first level consists of prioritizing the data via a SWOT matrix (Strengths, Weaknesses, Opportunities, and Threats) for each data source. The second level involves a more detailed analysis that aims to determine how usable each source is, and then its potential. To ascertain the usability of a data source, the cost of accessing it, its acquisition costs, its structure and its reliability must all be taken into account, as well as any regulatory risks it may pose. Next, the potential of a data source that has been deemed usable is assessed on the basis of its capacity to generate added value in its own right or in combination with other sources. The result combines the outcome of the first level of analysis with the degree of usefulness determined, which allows the list of data sources to be finalized and then classified.

• Analyze: Assess the opportunities in the potential applications.

In order to analyze the opportunities offered by the various potential applications of the data, an implementation path must be put in place. This is established by carrying out a thorough analysis of infrastructure, IT and procurement needs and then raising the capability level of the teams. The purpose of the implementation path is, therefore, to properly quantify the development work required, in order to then select the priority applications.

• Develop: Define and put in place the technical means and people needed to carry out the experiments.

Once the relevant options for application have been selected, the next step consists of detailed scenario-making using the various aspects of the company’s business model: marketing, legal, technical, data pathways, and impacts on management. This scenario-making will then set the scene for the experiment through the establishment of success criteria, a platform for experimentation, and validated mock datasets ready for tests to be carried out.
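The second level of analysis in the Target stage above (usability first, then potential) can be sketched as a simple scoring exercise. This is only an illustration under stated assumptions: the 1 to 5 scales, the equal weighting, the `min_usability` threshold of 3.0, and the sample scores are all hypothetical and do not come from the article.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    name: str
    access_cost: int       # 1 (cheap) to 5 (expensive), assumed scale
    acquisition_cost: int  # 1 (cheap) to 5 (expensive)
    structure: int         # 1 (unstructured) to 5 (well structured)
    reliability: int       # 1 (unreliable) to 5 (reliable)
    regulatory_risk: int   # 1 (low risk) to 5 (high risk)
    potential: int         # 1 to 5, value alone or combined with other sources


def usability(source):
    """Average the usability criteria, inverting costs and risk so higher is better."""
    favourable = [
        6 - source.access_cost,
        6 - source.acquisition_cost,
        source.structure,
        source.reliability,
        6 - source.regulatory_risk,
    ]
    return sum(favourable) / len(favourable)


def prioritize(sources, min_usability=3.0):
    """Keep only sources deemed usable, then rank them by potential (ties broken by usability)."""
    usable = [s for s in sources if usability(s) >= min_usability]
    return sorted(usable, key=lambda s: (s.potential, usability(s)), reverse=True)


# Illustrative scores only.
sources = [
    DataSource("internal claims history", 1, 1, 5, 4, 2, 4),
    DataSource("connected devices", 3, 4, 4, 4, 3, 5),
    DataSource("social networks", 2, 2, 1, 2, 5, 4),
]

ranked = prioritize(sources)
for s in ranked:
    print(f"{s.name}: usability={usability(s):.1f}, potential={s.potential}")
```

In practice the weights and threshold would be agreed with the business functions, and the SWOT outcome from the first level could be added as a further input.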

An illustration that summarizes this approach is shown below:

One of the main challenges in the examination of data is linked to the fact that some data will be of no interest on its own, but only when considered in correlation with one or more other data sets. Compared with working in silos, which is generally the case currently, this approach is based on the assumption that there is a desire to break with traditional operational models.

Beyond Big Data – Smart Data

Beyond Big Data, Smart Data is drawing nearer and offers a degree of pragmatism. The founding principle of the approach is essentially the idea of working with operators to build an approach that initially maximizes the exploitation of internal information assets, and then looks externally later, all the while ensuring customers’ data is protected. The aim is to harness the data and make use of it for the benefit of the company’s functions, its customers and the business in general. To do this, a Smart Data project must be focused and make a limited selection of pertinent options, from which the potential value of each set of data can be determined according to “test and learn” experimentation.

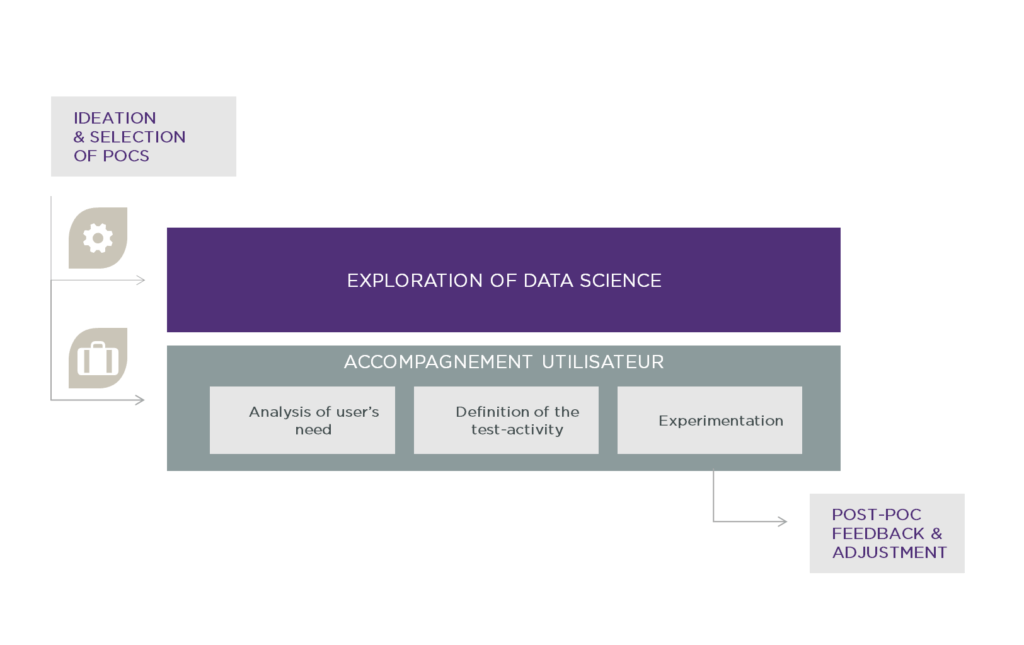

The spirit of a Smart POC

A Smart POC takes the form of a mini theoretical study followed by an experiment, often of a reduced size, with the purpose of either testing or confirming a hypothesis. The process follows a series of iterations that involves exploring the potential of available data and supporting its users. The initial step is to build a database and analyze its contents according to the needs expressed by the users. Next, the data is used in models, with prototypes being built before starting any experimentation, following which the prototypes are adjusted accordingly.

The next step, as shown below, is a series of technical and functional iterations. To do this, it is important to maintain flexibility by retaining a start-up mentality amongst teams and by anticipating the future in order to see the work yield results at full scale in the business.

Extracting value from data is certainly an issue of transformation for insurers; nevertheless, it is not merely a question of using a well-established process to deliver a certain degree of value. It is about conducting work for which pragmatism really is the watchword. The data sources are selected to meet the needs of players in the business, across the full range of functions, and using an agile approach. Iterations will allow them to develop concrete options for use while being able to adjust the selection of data sources.
